# Physical worker node labeling in HPE Ezmeral Container Platform

# Introduction

Discovering node properties and advertising them through node labels makes it possible to control workload placement in a Kubernetes cluster. With HPE Ezmeral Container Platform running on HPE server platforms, organizations can automate the discovery of hardware properties and use that information to schedule workloads that benefit from the capabilities of the underlying hardware. Using the REST and Redfish API-based discovery capabilities of HPE iLO (through the proliantutils library), the following properties of the nodes can be discovered:

- Presence of GPUs
- Underlying RAID configurations
- Presence of disks by type
- Persistent memory availability
- Status of CPU virtualization features
- SR-IOV capabilities
- CPU architecture
- CPU core count
- Platform information, including model, iLO version, and BIOS version
- Memory capacity
- UEFI security settings
- Health status of compute, storage, and network components

After these properties are discovered for the physical worker nodes, HPE Ezmeral Container Platform node labeling can be used to group nodes based on their underlying features. By default, every node has its node name as a label.

The following properties can be used to label nodes:

- Overall health status of the node: If the current status of the BIOS, fans, temperature sensors, battery, processor, memory, network, and storage is OK, the node is labeled health=OK. Otherwise, it is labeled health=Degraded.

- Overall security status of the node: If the following security-relevant BIOS configuration items are set to their expected values, the node is labeled security=OK. Otherwise, it is labeled security=Degraded.

NOTE

Based on the HPE Gen10 Security Reference Guide, the recommended values for these parameters are as follows:

- Secure Boot: Enabled
- Asset tag: Locked
- UEFI Shell Script Verification: Enabled
- UEFI Shell Startup: Disabled
- Processor AES: Enabled

For more information, refer to the HPE Gen10 Security Reference Guide at https://support.hpe.com/hpesc/public/docDisplay?docId=a00018320en_us.

- Custom labels: User-defined labels (key/value pairs) can be assigned to selected physical worker nodes. Users can run the Python scripts to retrieve the properties of the underlying hardware and then decide which labels to assign to each physical worker node.
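The health and security rules above are both all-or-nothing checks: a single failing item turns the label to Degraded. The following Python sketch illustrates that logic under stated assumptions. The input dictionaries, and the key names used in them, are hypothetical and do not reflect how the repository's scripts or proliantutils actually represent iLO data.

```python
from typing import Dict

# Components named in the health rule above. The key spellings are
# illustrative; they are not the field names used by proliantutils or iLO.
HEALTH_COMPONENTS = (
    "BIOS", "Fans", "Temperature Sensors", "Battery",
    "Processor", "Memory", "Network", "Storage",
)

# Recommended values from the HPE Gen10 Security Reference Guide (see NOTE).
# Keys are descriptive names, not actual BIOS/Redfish attribute names.
SECURITY_BASELINE = {
    "Secure Boot": "Enabled",
    "Asset tag": "Locked",
    "UEFI Shell Script Verification": "Enabled",
    "UEFI Shell Startup": "Disabled",
    "Processor AES": "Enabled",
}


def health_label(component_status: Dict[str, str]) -> str:
    """Return "OK" only if every listed component reports OK."""
    for component in HEALTH_COMPONENTS:
        # A component missing from the input counts as not OK (an assumption).
        if component_status.get(component, "").upper() != "OK":
            return "Degraded"
    return "OK"


def security_label(bios_settings: Dict[str, str]) -> str:
    """Return "OK" only if every baseline item has its recommended value."""
    for item, expected in SECURITY_BASELINE.items():
        if bios_settings.get(item) != expected:
            return "Degraded"
    return "OK"


if __name__ == "__main__":
    status = {name: "OK" for name in HEALTH_COMPONENTS}
    print(health_label(status))      # OK
    status["Fans"] = "Warning"
    print(health_label(status))      # Degraded
    bios = dict(SECURITY_BASELINE)
    bios["UEFI Shell Startup"] = "Enabled"
    print(security_label(bios))      # Degraded
```

Treating a missing component as not OK is a conservative design choice: a node is only advertised as healthy when every item was positively confirmed.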

# High-level process flow diagram for node labeling

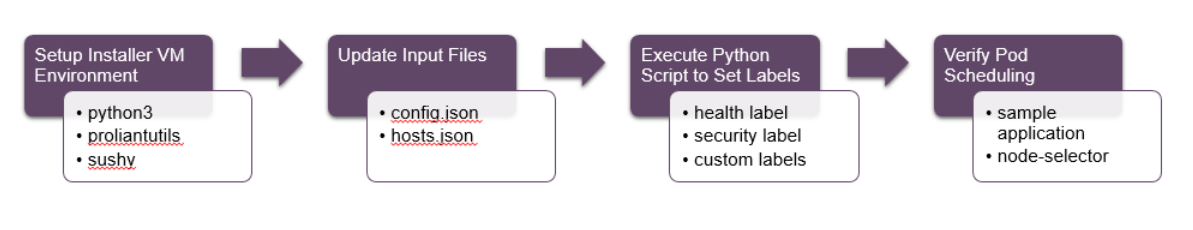

Node labeling can be broken down into four main phases:

- Setting up the installer VM environment

- Updating the input files with the server information

- Execution of the Python scripts

- Verification of the pod scheduling

Figure 63 shows the high-level flow for node labeling.

Figure 63. Process flow for Node Labeling

# Scripts for labeling worker nodes

To label the physical worker nodes in HPE Ezmeral Container Platform, use the HPE Ezmeral Container Platform Solutions repository on GitHub at https://github.com/hewlettpackard/hpe-solutions-hpecp. The scripts for HPE Ezmeral Container Platform 5.1 physical worker node labeling are available in the repository under /scripts/physical_worker_node_labeling/. This folder contains Python scripts that automate the labeling of physical worker nodes in an HPE Ezmeral Container Platform 5.1 deployment by discovering the physical node properties and advertising them through node labels. Workloads can then target these labels by using node selectors.

# Contents of the repository

config.json: This file contains variables specific to the HPE Ezmeral Container Platform environment.

- kubeconfig_path: Path of the kubeconfig file used by the kubectl command at runtime.
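As an illustration of how kubeconfig_path can be consumed, the following Python sketch reads config.json and builds a kubectl command that points at the configured kubeconfig. It is a minimal sketch that assumes config.json is a flat JSON object with a kubeconfig_path key; the function names are hypothetical, and passing the path through the standard `--kubeconfig` flag is an assumption about how the repository's script uses it.

```python
import json
from typing import List


def load_config(path: str = "config.json") -> dict:
    """Read config.json (assumed to be in the current directory)."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def kubectl_command(config: dict, *args: str) -> List[str]:
    """Build a kubectl argument list that uses the configured kubeconfig."""
    # --kubeconfig is a standard kubectl flag. Whether the repository's
    # script passes the path this way is an assumption of this sketch.
    return ["kubectl", "--kubeconfig", config["kubeconfig_path"], *args]


if __name__ == "__main__":
    # Hypothetical config.json content: {"kubeconfig_path": "/root/.kube/config"}
    cfg = {"kubeconfig_path": "/root/.kube/config"}
    print(kubectl_command(cfg, "get", "nodes"))
```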

hosts.json: This is the Ansible Vault-encrypted inventory file that the HPE Ezmeral Container Platform installer VM uses to reference the physical worker nodes and user-defined labels. For each physical worker node, it contains the following keys:

- host_fqdn: Fully qualified domain name (FQDN) or IP address of the physical worker node.
- ilo_ip: iLO IP address of the physical worker node.
- username: Username used to log in to the iLO of the physical worker node.
- password: Password used to log in to the iLO of the physical worker node.
- custom_label_required: Set to "yes" if custom labels should be applied; otherwise, set to "no".
- custom_labels: Key and value of each custom label.

To edit this vault file, use the following command and provide the default Ansible Vault password ('changeme').

> ansible-vault edit hosts.json

NOTE

The information inside hosts.json is stored as nested JSON, so users can add any number of physical worker nodes by creating sections named server1, server2, server3, ..., servern, and any number of custom labels named label1, label2, label3, ..., labeln.
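To make the nesting concrete, the following Python sketch walks a decrypted hosts.json structure and collects the custom labels to apply. The layout of SAMPLE_HOSTS mirrors the keys listed above and the output of menu option 1 later in this document; the host names, IP addresses, and password are placeholders, and the custom_labels helper is hypothetical rather than part of the repository.

```python
from typing import Dict, Iterator, Tuple

# Placeholder content shaped like a decrypted hosts.json.
SAMPLE_HOSTS = {
    "server1": {
        "host_fqdn": "worker1.example.local",
        "ilo_ip": "192.0.2.10",
        "username": "admin",
        "password": "<ilo-password>",
        "custom_label_required": "yes",
        "custom_labels": {
            "label1": {"label_name": "RESOURCES", "label_val": "MAX"},
            "label2": {"label_name": "NODEAFFINITY", "label_val": "TEST"},
        },
    },
    "server2": {
        "host_fqdn": "worker2.example.local",
        "ilo_ip": "192.0.2.11",
        "username": "admin",
        "password": "<ilo-password>",
        "custom_label_required": "no",
    },
}


def custom_labels(hosts: Dict[str, dict]) -> Iterator[Tuple[str, str, str]]:
    """Yield (host_fqdn, label_name, label_val) for servers that opted in."""
    for server in hosts.values():  # server1 ... servern
        if server.get("custom_label_required", "no").lower() != "yes":
            continue
        for label in server.get("custom_labels", {}).values():  # label1 ... labeln
            yield server["host_fqdn"], label["label_name"], label["label_val"]


if __name__ == "__main__":
    for fqdn, name, value in custom_labels(SAMPLE_HOSTS):
        print(f"{fqdn}: {name}={value}")
```

Because servers and labels are just keys in nested objects, adding a server or a label only means adding another entry; the iteration logic does not change.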

json_parser.py: This file contains the logic to retrieve the value of any standalone or nested key from a JSON file.
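The contents of json_parser.py are not shown in this document. As a rough idea of what nested-key lookup involves, here is a minimal Python sketch; the get_value function and its default parameter are hypothetical names, not the module's actual API.

```python
import json
from typing import Any


def get_value(data: Any, *keys: str, default: Any = None) -> Any:
    """Follow keys through nested dicts; return default if any key is missing."""
    current = data
    for key in keys:
        if not isinstance(current, dict) or key not in current:
            return default
        current = current[key]
    return current


if __name__ == "__main__":
    doc = json.loads('{"server1": {"ilo_ip": "192.0.2.10", '
                     '"custom_labels": {"label1": {"label_name": "RESOURCES"}}}}')
    print(get_value(doc, "server1", "ilo_ip"))
    print(get_value(doc, "server1", "custom_labels", "label1", "label_name"))
    print(get_value(doc, "server9", "ilo_ip", default="missing"))
```

A single key reads a standalone value; passing several keys descends one level per key.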

physical_node_labelling.py: This file contains the logic to discover the hardware properties of the HPE Ezmeral Container Platform physical worker nodes and to label the nodes based on those properties and on user-defined label names. The script uses the Python module proliantutils to extract the hardware properties.
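Once a label value is decided, it has to be written to the Kubernetes node object. One common way to do that is the `kubectl label` command, and the following Python sketch assumes that approach; whether physical_node_labelling.py works this way, and the helper names used here, are assumptions. The sketch validates the label value against the Kubernetes label-value syntax (at most 63 characters, alphanumeric at both ends, with `-`, `_`, and `.` allowed in between) and uses `--overwrite` so that a later run can change, for example, health=OK to health=Degraded.

```python
import re
import subprocess
from typing import List

# Kubernetes label-value syntax; only the value is checked in this sketch.
_LABEL_VALUE = re.compile(r"(([A-Za-z0-9][-A-Za-z0-9_.]*)?[A-Za-z0-9])?")


def label_command(node: str, key: str, value: str) -> List[str]:
    """Build a `kubectl label` invocation for one node label."""
    if len(value) > 63 or not _LABEL_VALUE.fullmatch(value):
        raise ValueError(f"invalid label value: {value!r}")
    # Without --overwrite, kubectl refuses to change an existing label value.
    return ["kubectl", "label", "nodes", node, f"{key}={value}", "--overwrite"]


def apply_label(node: str, key: str, value: str) -> None:
    """Run the command; raises CalledProcessError if kubectl fails."""
    subprocess.run(label_command(node, key, value), check=True)


if __name__ == "__main__":
    print(label_command("worker1.example.local", "health", "OK"))
```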

# Prerequisites

- Ansible Engine with Ansible 2.9.x, ansible-vault, and Python 3.6.x.

- HPE Ezmeral Container Platform 5.1 is up and running.

- The HPE Ezmeral Container Platform 5.1 deployment must have at least one physical worker node to use the node labeling functionality.

- Ensure that the iLO IP addresses of the physical worker nodes are reachable (for example, by pinging them), because the script communicates with each iLO to retrieve server information.

- The scripts in this repository must be run from the installer machine, with the Python virtual environment set up as described in the Installer Machine section of the Deployment Guide.

- Ensure that the kubectl tool is available in the PATH and that the kubeconfig file of the cluster is in the $HOME/.kube/ directory (for example, /root/.kube/config).

The kubeconfig file can be obtained in two ways:

- From the HPE Ezmeral Container Platform GUI: navigate to the Clusters section and select Download Admin Kubeconfig from the options available for the cluster.

- By copying the config file from the $HOME/.kube/ directory on the master node to the installer machine.

Ensure that the kubeconfig file is named config and placed in the $HOME/.kube/ directory (for example, /root/.kube/config) of the installer machine.

The Python module "proliantutils" is required. proliantutils is a set of utility libraries for interfacing with and managing various components (such as iLO) of HPE ProLiant servers.

- Use the following command to install proliantutils.

> pip3 install proliantutils==2.9.2

- Verify the version of proliantutils.

> pip3 freeze | grep proliantutils

Output:

proliantutils==2.9.2

The "sushy" Python library is also required. If sushy is already installed, make sure its version is 3.0.0.

- Use the following command to install the sushy module.

> pip3 install sushy==3.0.0

- Verify the version of sushy.

> pip3 freeze | grep sushy

Output:

sushy==3.0.0
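The same version check can be done from inside Python, which is convenient when verifying the virtual environment programmatically. This sketch uses importlib.metadata on Python 3.8 and later and falls back to setuptools' pkg_resources on older interpreters such as the Python 3.6 used here; the helper names are hypothetical.

```python
from typing import Dict, Optional

try:  # Python 3.8+
    from importlib.metadata import PackageNotFoundError, version
except ImportError:  # Python 3.6/3.7: fall back to setuptools' pkg_resources
    from pkg_resources import DistributionNotFound as PackageNotFoundError
    from pkg_resources import get_distribution

    def version(name: str) -> str:
        return get_distribution(name).version

# Versions pinned in the installation steps above.
REQUIRED = {"proliantutils": "2.9.2", "sushy": "3.0.0"}


def installed_version(name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if absent."""
    try:
        return version(name)
    except PackageNotFoundError:
        return None


def check_versions(required: Dict[str, str]) -> Dict[str, bool]:
    """Map each package name to True if exactly the pinned version is installed."""
    return {name: installed_version(name) == pin for name, pin in required.items()}


if __name__ == "__main__":
    for name, ok in check_versions(REQUIRED).items():
        found = installed_version(name) or "not installed"
        print(f"{name}: {found} ({'OK' if ok else 'expected ' + REQUIRED[name]})")
```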

NOTE

Master nodes have the kubectl tool and the kubeconfig file by default, so the scripts can also be run from a master node after setting up the Python environment, installing the required modules, and setting up the repository.
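Before running the scripts, the kubectl, kubeconfig, and iLO connectivity prerequisites can be checked together. The following Python sketch is one way to do that. Instead of ping, which sends ICMP and may require elevated privileges, it tests a TCP connection to the iLO's HTTPS port (443 by default), since the REST and Redfish APIs are served over HTTPS; this substitution, the helper names, and the sample iLO address are assumptions.

```python
import shutil
import socket
from pathlib import Path
from typing import List


def tcp_reachable(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, name resolution failure
        return False


def preflight(ilo_ips: List[str],
              kubeconfig: Path = Path.home() / ".kube" / "config") -> List[str]:
    """Return a list of problems; an empty list means all checks passed."""
    problems = []
    if shutil.which("kubectl") is None:
        problems.append("kubectl not found on PATH")
    if not kubeconfig.is_file():
        problems.append(f"kubeconfig not found at {kubeconfig}")
    for ip in ilo_ips:
        if not tcp_reachable(ip):
            problems.append(f"iLO {ip} not reachable on TCP 443")
    return problems


if __name__ == "__main__":
    # Hypothetical iLO address; use the ilo_ip values from hosts.json instead.
    for problem in preflight(["192.0.2.10"]):
        print("FAIL:", problem)
```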

# Executing the scripts

1. Log in to the installer VM.

2. Activate the Python 3 virtual environment as described in the Installer machine section of this document.

3. Execute the following command to navigate to the physical worker node labeling directory.

> cd BASE_DIR/platform/physical_worker_node_labeling/

NOTE

BASE_DIR is defined and set in the Installer machine section of the Deployment Guide.

4. Update the config.json and hosts.json files with appropriate values.

> vi config.json

> ansible-vault edit hosts.json

5. After the files mentioned in step 4 are updated, execute the script using the following command.

> python physical_node_labelling.py

The user is prompted to enter the Ansible Vault password (key). This is the default Ansible Vault password, 'changeme'.

Enter key for encrypted variables:

The installation user sees output similar to the following.

1: Get the physical worker node details that user wishes to configure.

2: Get current health status of the physical worker node

3: Get security parameters of the physical worker node

4: Label the physical worker with health status

5: Label the physical worker with security status

6: Custom labels

7: Display current labels on the node

8: Quit

Enter the choice number:

Select option 1 to retrieve the physical worker node details. The output is similar to the following.

Enter the choice number: 1

{'server1': {'host_fqdn': 'pworkerphysical2.pranav.twentynet.local', 'ilo_ip': '10.0.3.64', 'username': 'admin', 'password': 'admin123', 'custom_label_required': 'yes', 'custom_labels': {'label1': {'label_name': 'RESOURCES', 'label_val': 'MAX'}, 'label2': {'label_name': 'NODEAFFINITY', 'label_val': 'TEST'}}}}

Select option 2 to retrieve the current health status of the physical worker node. The output is similar to the following.

Enter the choice number: 2

{'pworkerphysical2.pranav.twentynet.local': 'OK'}

Select option 3 to retrieve the security parameters of the physical worker node. The output is similar to the following.

Enter the choice number: 3

{'pworkerphysical2.pranav.twentynet.local': 'Degraded'}

Select option 4 to label the physical worker with its current hardware health status. The output is similar to the following.

Enter the choice number: 4

| NAME | STATUS | ROLES | AGE | VERSION | LABELS |
|---|---|---|---|---|---|
| pworkerphysical2.pranav.twentynet.local | Ready | worker | 3d1h | v1.17.4 | beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,health=OK,hpe.com/compute=true,hpe.com/dataplatform=false,hpe.com/exclusivecluster=none,hpe.com/usenode=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=pworkerphysical2.pranav.twentynet.local,kubernetes.io/os=linux,node-role.kubernetes.io/worker= |

Verified - Label health=OK is added to the node pworkerphysical2.pranav.twentynet.local b''

Select option 5 to label the physical worker with its security status. The output is similar to the following.

Enter the choice number: 5

| NAME | STATUS | ROLES | AGE | VERSION | LABELS |
|---|---|---|---|---|---|
| pworkerphysical2.pranav.twentynet.local | Ready | worker | 3d1h | v1.17.4 | beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,health=OK,hpe.com/compute=true,hpe.com/dataplatform=false,hpe.com/exclusivecluster=none,hpe.com/usenode=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=pworkerphysical2.pranav.twentynet.local,kubernetes.io/os=linux,node-role.kubernetes.io/worker=,security=Degraded |

Verified - Label security=Degraded is added to the node pworkerphysical2.pranav.twentynet.local b''

Select option 6 to apply the custom labels. The output is similar to the following.

Enter the choice number: 6

| NAME | STATUS | ROLES | AGE | VERSION | LABELS |
|---|---|---|---|---|---|
| pworkerphysical2.pranav.twentynet.local | Ready | worker | 3d2h | v1.17.4 | RESOURCES=MAX,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,health=OK,hpe.com/compute=true,hpe.com/dataplatform=false,hpe.com/exclusivecluster=none,hpe.com/usenode=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=pworkerphysical2.pranav.twentynet.local,kubernetes.io/os=linux,node-role.kubernetes.io/worker=,security=Degraded |

Verified - Label RESOURCES=MAX is added the node pworkerphysical2.pranav.twentynet.local

| NAME | STATUS | ROLES | AGE | VERSION | LABELS |
|---|---|---|---|---|---|
| pworkerphysical2.pranav.twentynet.local | Ready | worker | 3d2h | v1.17.4 | NODEAFFINITY=TEST,RESOURCES=MAX,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,health=OK,hpe.com/compute=true,hpe.com/dataplatform=false,hpe.com/exclusivecluster=none,hpe.com/usenode=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=pworkerphysical2.pranav.twentynet.local,kubernetes.io/os=linux,node-role.kubernetes.io/worker=,security=Degraded |

Verified - Label NODEAFFINITY=TEST is added the node pworkerphysical2.pranav.twentynet.local b''

Select option 7 to display the current labels on the node. The output is similar to the following.

Enter the choice number: 7

| NAME | STATUS | ROLES | AGE | VERSION | LABELS |
|---|---|---|---|---|---|
| pworkerphysical2.pranav.twentynet.local | Ready | worker | 3d2h | v1.17.4 | NODEAFFINITY=TEST,RESOURCES=MAX,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,health=OK,hpe.com/compute=true,hpe.com/dataplatform=false,hpe.com/exclusivecluster=none,hpe.com/usenode=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=pworkerphysical2.pranav.twentynet.local,kubernetes.io/os=linux,node-role.kubernetes.io/worker=,security=Degraded |

Select option 8 to quit the script. The output is shown as follows.

Enter the choice number: 8

Exiting!!
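The script prints a Verified message after each label is applied. To check a node's labels independently of the script, the following Python sketch reads them through `kubectl get node <name> -o json`, a standard kubectl invocation, and parses metadata.labels from the returned Node object. The helper names are hypothetical, and this is not necessarily how the repository's script performs its own verification.

```python
import json
import subprocess
from typing import Dict


def labels_from_json(text: str) -> Dict[str, str]:
    """Extract metadata.labels from a Node object serialized as JSON."""
    return json.loads(text).get("metadata", {}).get("labels", {})


def node_labels(node: str) -> Dict[str, str]:
    """Fetch a node's labels with `kubectl get node <name> -o json`."""
    out = subprocess.run(
        ["kubectl", "get", "node", node, "-o", "json"],
        check=True, stdout=subprocess.PIPE,
    ).stdout
    return labels_from_json(out.decode("utf-8"))


def has_label(labels: Dict[str, str], key: str, value: str) -> bool:
    """True if the node carries exactly key=value."""
    return labels.get(key) == value


if __name__ == "__main__":
    labels = node_labels("pworkerphysical2.pranav.twentynet.local")
    print(has_label(labels, "health", "OK"))
```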

# Verify scheduling of pods using nodeSelector

Execute the following command to get all the nodes in the HPE Ezmeral Container Platform installation.

> kubectl get nodes

The output is similar to the following.

| NAME | STATUS | ROLES | AGE | VERSION |
|---|---|---|---|---|
| pmaster.pranav.twentynet.local | Ready | master | 17d | v1.17.4 |
| pworker1.pranav.twentynet.local | Ready | worker | 17d | v1.17.4 |
| pworker2.pranav.twentynet.local | Ready | worker | 17d | v1.17.4 |
| pworker3.pranav.twentynet.local | Ready | worker | 17d | v1.17.4 |
| pworkerphysical2.pranav.twentynet.local | Ready | worker | 3d2h | v1.17.4 |
| sumslesf1b1.twentynet.local | Ready | worker | 3d20h | v1.17.4 |

NOTE

The nodes pworkerphysical2.pranav.twentynet.local and sumslesf1b1.twentynet.local are the physical worker nodes; the other worker nodes are virtual machines.

Execute the following command to see the labels of all the nodes.

> kubectl get nodes --show-labels

The output is similar to the following.

| NAME | STATUS | ROLES | AGE | VERSION | LABELS |
|---|---|---|---|---|---|
| pmaster.pranav.twentynet.local | Ready | master | 17d | v1.17.4 | beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=pmaster.pranav.twentynet.local,kubernetes.io/os=linux,node-role.kubernetes.io/master= |
| pworker1.pranav.twentynet.local | Ready | worker | 17d | v1.17.4 | beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,hpe.com/compute=true,hpe.com/dataplatform=false,hpe.com/exclusivecluster=none,hpe.com/usenode=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=pworker1.pranav.twentynet.local,kubernetes.io/os=linux,node-role.kubernetes.io/worker= |
| pworker2.pranav.twentynet.local | Ready | worker | 17d | v1.17.4 | beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,hpe.com/compute=true,hpe.com/dataplatform=false,hpe.com/exclusivecluster=none,hpe.com/usenode=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=pworker2.pranav.twentynet.local,kubernetes.io/os=linux,node-role.kubernetes.io/worker= |
| pworker3.pranav.twentynet.local | Ready | worker | 17d | v1.17.4 | beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,hpe.com/compute=true,hpe.com/dataplatform=false,hpe.com/exclusivecluster=none,hpe.com/usenode=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=pworker3.pranav.twentynet.local,kubernetes.io/os=linux,node-role.kubernetes.io/worker= |
| pworkerphysical2.pranav.twentynet.local | Ready | worker | 3d2h | v1.17.4 | NODEAFFINITY=TEST,RESOURCES=MAX,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,health=OK,hpe.com/compute=true,hpe.com/dataplatform=false,hpe.com/exclusivecluster=none,hpe.com/usenode=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=pworkerphysical2.pranav.twentynet.local,kubernetes.io/os=linux,node-role.kubernetes.io/worker=,security=Degraded |
| sumslesf1b1.twentynet.local | Ready | worker | 3d20h | v1.17.4 | HPECP=EZMERAL,PRANAV=NODE_LABELLING,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,health=Degraded,hpe.com/compute=true,hpe.com/dataplatform=false,hpe.com/exclusivecluster=none,hpe.com/usenode=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=sumslesf1b1.twentynet.local,kubernetes.io/os=linux,node-role.kubernetes.io/worker=,security=Degraded |

Execute the following commands to create a new application pod in the default namespace.

> vi nginx.yaml

Fill in the contents of the YAML file as follows:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    env: test
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
  # Schedule only on nodes labeled health=OK
  nodeSelector:
    health: OK
```

> kubectl create -f nginx.yaml

The output is similar to the following.

pod/nginx created

NOTE

Here, the nodeSelector field restricts the pod to nodes that have the label health=OK, so the pod is deployed on such a node.

In this case, the node with the label health=OK is pworkerphysical2.pranav.twentynet.local.
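The placement in this example follows the nodeSelector rule: a pod can be scheduled only on nodes whose labels include every key/value pair in its nodeSelector. The following Python sketch models just that label filter, using a trimmed copy of the labels from the kubectl get nodes --show-labels output above. It is an illustration, not the scheduler itself; the real Kubernetes scheduler also applies other constraints such as taints and resource requests.

```python
from typing import Dict, List

# Trimmed node labels from the `kubectl get nodes --show-labels` output above.
NODES = {
    "pworker1.pranav.twentynet.local": {"hpe.com/compute": "true"},
    "pworkerphysical2.pranav.twentynet.local": {
        "health": "OK", "security": "Degraded", "RESOURCES": "MAX"},
    "sumslesf1b1.twentynet.local": {
        "health": "Degraded", "security": "Degraded", "HPECP": "EZMERAL"},
}


def matches(node_labels: Dict[str, str], node_selector: Dict[str, str]) -> bool:
    """nodeSelector semantics: every key/value pair must be present on the node."""
    return all(node_labels.get(k) == v for k, v in node_selector.items())


def eligible_nodes(nodes: Dict[str, Dict[str, str]],
                   selector: Dict[str, str]) -> List[str]:
    """Names of nodes whose labels satisfy the selector."""
    return [name for name, labels in nodes.items() if matches(labels, selector)]


if __name__ == "__main__":
    print(eligible_nodes(NODES, {"health": "OK"}))
```

Only pworkerphysical2.pranav.twentynet.local satisfies health=OK; the virtual worker nodes have no health label at all, and sumslesf1b1.twentynet.local is labeled health=Degraded.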

Execute the following command to get the pods for the newly created application.

> kubectl get pods -o wide

The output is similar to the following.

| NAME | READY | STATUS | RESTARTS | AGE | IP | NODE | NOMINATED NODE | READINESS GATES |
|---|---|---|---|---|---|---|---|---|
| nginx | 1/1 | Running | 0 | 3d | 10.192.2.131 | pworkerphysical2.pranav.twentynet.local | `<none>` | `<none>` |

NOTE

The pod is deployed on the node pworkerphysical2.pranav.twentynet.local as expected, because that node has the label health=OK.

Execute the following command to describe the application pod.

> kubectl describe pod nginx

The output is similar to the following.

| Field | Value |
|---|---|
| Name | nginx |
| Namespace | default |
| Priority | 0 |
| Node | pworkerphysical2.pranav.twentynet.local/20.0.15.12 |
| Start Time | Fri, 31 Jul 2020 08:16:38 -0400 |
| Labels | env=test |
| Annotations | cni.projectcalico.org/podIP: 10.192.2.131/32, kubernetes.io/psp: 00-privileged |
| Status | Running |
| IP | 10.192.2.131 |
| IPs | IP: 10.192.2.131 |
| Containers | nginx |
| Container ID | docker://f36bf7b0c237431cfdbde575f3f825a0c49f73dc821d34a31c1bcd1c9e13fa02 |
| Image | nginx |
| Image ID | docker-pullable://nginx@sha256:0e188877aa60537d1a1c6484b8c3929cfe09988145327ee47e8e91ddf6f76f5c |
| Port | `<none>` |
| Host Port | `<none>` |
| State | Running (Started: Fri, 31 Jul 2020 08:16:39 -0400) |
| Ready | True |
| Restart Count | 0 |
| Environment | `<none>` |
| Mounts | /var/run/secrets/kubernetes.io/serviceaccount from default-token-qz4zk (ro) |
| Conditions | Initialized: True, Ready: True, ContainersReady: True, PodScheduled: True |
| Volumes | default-token-qz4zk (Type: Secret (a volume populated by a Secret), SecretName: default-token-qz4zk, Optional: false) |
| QoS Class | BestEffort |
| Node-Selectors | health=OK |
| Tolerations | node.kubernetes.io/not-ready:NoExecute for 300s, node.kubernetes.io/unreachable:NoExecute for 300s |
| Events | `<none>` |

NOTE

The Node-Selectors field shows the health=OK label.

NOTE

These scripts have been tested on HPE Ezmeral Container Platform 5.1 with the following configuration parameters:

- Worker nodes run SLES as the operating system
- Installer VM OS version: CentOS 7.6
- Python: 3.6.9
- proliantutils: 2.9.2
- sushy: 3.0.0